Depending on the requirements, you can do well with Helidon, Piranha, or Hammock.

Jakarta EE (formerly Java EE) and the concept of an application server have been intertwined for so long that it’s generally thought that Jakarta EE implies an application server. This article will look at whether that’s still the case—and, if Jakarta EE isn’t an application server, what is it?

Let’s start with definitions. The various Jakarta EE specifications use the phrase application server but don’t specifically define it. The phrase is often used interchangeably with terms such as runtime, container, or platform. For instance, the individual specification documents contain statements such as the following:

◉ Jakarta authorization: “The Application server must bundle or install the PolicyContext class…”

◉ Jakarta messaging: “A ServerSessionPool object is an object implemented by an application server to provide a pool of ServerSession objects…”

◉ Jakarta connectors: “This method is called by an application server (that is capable of lazy connection association optimization) in order to dissociate a ManagedConnection…”

The Jakarta EE 9 platform specification doesn’t explicitly define an application server either, but section 2.12.1 does say the following:

A Jakarta EE Product Provider is the implementor and supplier of a Jakarta EE product that includes the component containers, Jakarta EE platform APIs, and other features defined in this specification. A Jakarta EE Product Provider is typically an application server vendor, a web server vendor, a database system vendor, or an operating system vendor. A Jakarta EE Product Provider must make available the Jakarta EE APIs to the application components through containers.

The term container is equivalent to engine, and very early J2EE documents speak about the “Servlet Engine.”

Thus, the various specification documents do not really specify what an application server is, and when they do mention it, it’s basically the same as a container or runtime. In practice, when someone speaks about an application server, this means something that includes all of the following:

◉ It is separately installed on the server or virtual machine.

◉ It listens to networking ports after it is started (and typically includes an HTTP server).

◉ It acts as a deployment target for applications (typically in the form of a well-defined archive), which can be both deployed and undeployed.

◉ It runs multiple applications at the same time (typically weakly isolated from each other in some way).

◉ It has facilities for provisioning resources (such as database connections, messaging queues, and identity stores) for the application to consume.

◉ It contains a full-stack API and the API’s implementation for consumption by applications.

In addition, an application server may include the following:

◉ A graphical user interface or command-line interface to administer the application server

◉ Facilities for clustering (so load and data can be distributed over a network)

Pushback against the full-fledged application server

The application server model has specific advantages when shrink-wrapped software needs to be deployed into an organization and integrated with other software running there. In such situations, for example, an application server can eliminate the need for users to authenticate themselves with every application. Instead, the application server might use a central Lightweight Directory Access Protocol (LDAP) service as its identity store for employees, allowing applications running on the application server to share that service.

This model, however, can be less ideal when an organization develops and operates its own public-facing web applications. In that case, the application needs to exert more control. For instance, instead of using the same LDAP service employees use, the organization’s customers would have a separate registration system and login screen implemented by the developers.

Here, an application server can be an obstacle because part of the security would need to be done outside of the application, often by an IT operations (Ops) team, who may not even know the application developers.

For example, an application server that hosts production applications is often shielded from the developer (Dev) team and is touched by only the Ops team. However, some problems on the server really belong in the Dev domain because they apply to in-house application development. This can lead to tickets that bounce between the Dev and Ops teams as the Dev team tries to steer the actions that only the Ops team is allowed to perform. (Note: The DevOps movement is an attempt to solve this long-standing problem.)

Another issue concerns the installed libraries within the application server. The exact versions of these libraries, and the potential need to patch or update them, are often a Dev concern. For instance, the Ops team manages routers, networks, Linux servers, and so on, and that team might not be very aware of the intricate details of Mojarra 2.3.1 versus Mojarra 2.3.2 or the need to patch the FacesServlet implementation.

Modern obsolescence: Docker and Kubernetes

The need for having an installed application server as the prime mechanism to share physical server resources started to diminish somewhat with the rise of virtual servers. A decade ago, you might see teams deploying a single application to a single dedicated application server running inside a virtual server. This practice, though, was uncommon enough that in 2013 the well-known Java EE consultant Adam Bien wrote a dedicated blog post about it, and that post received some pushback. One of the arguments against Bien’s idea was that running an entire (virtual) operating system for a single application would waste resources.

Almost exactly at the same time as Bien wrote his post, the Docker container platform was released. Containers run on a single operating system and, therefore, largely take the resource-wasting argument away. While containers themselves had been around since the 1970s, Docker added a deployment model and a central repository for container images (Docker Hub) that exploded in popularity.

With a deployment tool at hand, and the knowledge that fewer resources are wasted, deploying an application server running a single application finally went mainstream. In that setup, the native deployment feature and, above all, the undeployment feature of an application server are not really used, because most Docker deployments are static.

In 2015 the Kubernetes container orchestration system appeared, and it quickly became popular for managing many instances of (mostly) Docker containers. For Java EE application servers, Kubernetes means that Java EE’s native clustering facilities are not really used, because tasks such as load balancing are now managed by Kubernetes.

Around the same time, the serverless microservices execution model became more popular with cloud providers. This meant that the deployment unit didn’t need its own HTTP server. Instead, the deployment unit contained code that is called by the serverless server of the cloud provider. The result was that for such an environment, the built-in HTTP server of a Java EE or Jakarta EE application server is not needed anymore. Obviously, such code needs to provide an interface the cloud provider can call. There’s currently no standard for this, though Oracle is working with the Cloud Native Computing Foundation on a specification for this area.
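To make the idea of a deployment unit without its own HTTP server concrete, here is a minimal sketch in plain Java. Because there is no standard interface, everything in it is hypothetical: the Request and Response classes, the HELLO function, and the invoke method (which stands in for the cloud provider's runtime) are invented for illustration and do not correspond to any real provider API.

```java
import java.util.function.Function;

public class ServerlessSketch {

    // Hypothetical request shape; no real provider API is implied.
    static final class Request {
        final String path;

        Request(String path) {
            this.path = path;
        }
    }

    // Hypothetical response shape.
    static final class Response {
        final int status;
        final String body;

        Response(int status, String body) {
            this.status = status;
            this.body = body;
        }
    }

    // The deployment unit: application code only, with no HTTP server of its own.
    static final Function<Request, Response> HELLO = request ->
        "/hello".equals(request.path)
            ? new Response(200, "Hi!")
            : new Response(404, "Not found");

    // Stand-in for the provider's runtime, which owns the network side
    // and calls into the function for each incoming request.
    static Response invoke(Function<Request, Response> function, String path) {
        return function.apply(new Request(path));
    }

    public static void main(String[] args) {
        Response response = invoke(HELLO, "/hello");
        System.out.println(response.status + " " + response.body);
    }
}
```

The application code never opens a port; whoever calls invoke decides how requests arrive, which is the part a full application server would otherwise provide.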

The Jakarta EE APIs

Without deployments, without running multiple applications, without an HTTP server, and without clustering, a Jakarta EE application server is essentially reduced to the Jakarta EE APIs.

Interestingly, this is how the Servlet API (the first Java EE API) began. In its early versions, servlet containers had no notion of a deployed application archive and they didn’t have a built-in HTTP server. Instead, servlets were functions that were individually added to a servlet container, which was then paired with an existing HTTP server.

Despite some initial resistance from within the Java EE community, the APIs that touched the managed-container application server model started to transition to a life outside the application server. This included HttpServletRequest#login, which began the move away from the strict container-managed security model, and @DataSourceDefinition, which allowed an application to define the same type of data source (and connection pool) that before could be defined only on the application server.

In Java EE 8 (released in 2017), application security received a major overhaul with Jakarta Security, which had an explicit goal of making security fully configurable without any application server specifics.

For application servers, several choices are available, such as Oracle WebLogic Server.

What if you’re not looking for a full application server? Because there is tremendous value in the Jakarta EE APIs themselves, several products have sprung up that are not application servers but that do provide Jakarta EE APIs. Among these products are Helidon, Piranha Cloud, and Hammock, which shall be examined next.

Project Helidon

Project Helidon is an interesting runtime that’s not an application server. Of the three platforms I’ll examine, it’s the only one fully suitable for production use today.

Helidon is from Oracle, which is also known for Oracle WebLogic Server—one of the first application servers out there (going back all the way to the 1990s) and one that best embodies the application server concept.

Helidon is a lightweight set of libraries that doesn’t require an application server. It comes in two variants: Helidon SE and Helidon MP (MicroProfile).

Helidon SE. Helidon SE does not use any of the Servlet APIs but instead uses its own lightweight API, which is heavily inspired by Java functional programming. The following shows a very minimal example:

    WebServer.create(
        Routing.builder()
            .get(
                "/hello",
                (request, response) -> response.send("Hi!"))
            .build())
        .start();

Here’s an example that uses a full handler class, which is somewhat like using a servlet.

    WebServer.create(
        Routing.builder()
            .register(
                "/hello",
                rules -> rules.get("/", new HelloHandler()))
            .build())
        .start();

    public class HelloHandler implements Handler {

        @Override
        public void accept(ServerRequest req, ServerResponse res) {
            res.send("Hi");
        }
    }

In the Helidon native API, many types are functional types (one abstract method), so despite a class being used for the Handler in this example to contrast it to a servlet, a lambda could have been used. By default, Helidon SE launches an HTTP server in these examples at a random port, but it can be configured to use a specific port as well.
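The same two ideas, a handler that can be either a class or a lambda and a server that binds to a random port, can be tried without any Helidon dependency. The following sketch deliberately swaps in the JDK's built-in com.sun.net.httpserver package as a stand-in; it is not the Helidon API. The class name LambdaHandlerSketch, the helper methods, and the /hello-class path are assumptions made for this illustration.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpHandler;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class LambdaHandlerSketch {

    // Handler written as a class, comparable to a servlet.
    static class HelloHandler implements HttpHandler {
        @Override
        public void handle(HttpExchange exchange) throws IOException {
            respond(exchange, "Hi");
        }
    }

    // Sends a 200 response with the given body and closes the exchange.
    static void respond(HttpExchange exchange, String body) throws IOException {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        exchange.sendResponseHeaders(200, bytes.length);
        try (OutputStream out = exchange.getResponseBody()) {
            out.write(bytes);
        }
    }

    // Port 0 asks the operating system for any free port.
    static HttpServer start() {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress(0), 0);
            // HttpHandler has one abstract method, so a lambda works as well.
            server.createContext("/hello", exchange -> respond(exchange, "Hi!"));
            server.createContext("/hello-class", new HelloHandler());
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Small HTTP GET helper used to call the server from the same process.
    static String get(int port, String path) {
        HttpClient client = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_1_1)
            .build();
        HttpRequest request = HttpRequest
            .newBuilder(URI.create("http://localhost:" + port + path))
            .build();
        try {
            return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        HttpServer server = start();
        int port = server.getAddress().getPort();
        System.out.println("Listening on port " + port);
        System.out.println(get(port, "/hello"));
        System.out.println(get(port, "/hello-class"));
        server.stop(0);
    }
}
```

The port number printed differs from run to run, which is why a real deployment would normally configure a fixed port.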

Helidon MP. With Helidon MP, you declare a dependency on Helidon in the pom.xml file and set the Helidon parent POM as that file's parent. You then write normal MicroProfile/Jakarta EE code (for the Jakarta EE libraries that Helidon MP supports). After the build, you get a runnable JAR file including your code. A minimal example for such a pom.xml file looks as follows:

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <parent>
        <groupId>io.helidon.applications</groupId>
        <artifactId>helidon-mp</artifactId>
        <version>2.2.2</version>
        <relativePath />
      </parent>

      <groupId>com.example</groupId>
      <artifactId>example</artifactId>
      <version>1.0</version>

      <dependencies>
        <dependency>
          <groupId>io.helidon.microprofile.bundles</groupId>
          <artifactId>helidon-microprofile</artifactId>
        </dependency>
      </dependencies>

      <build>
        <plugins>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-dependency-plugin</artifactId>
            <executions>
              <execution>
                <id>copy-libs</id>
              </execution>
            </executions>
          </plugin>
        </plugins>
      </build>
    </project>

This runnable JAR file does not contain the Helidon libraries itself. Instead, those libraries are referenced via META-INF/MANIFEST.MF to be in the /libs folder relative to where the executable JAR file exists.
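The manifest mechanism can be inspected with the JDK's own java.util.jar.Manifest class. The sketch below parses a small hand-written manifest and lists its Class-Path entries, which the JVM resolves relative to the location of the JAR file. The manifest content is hypothetical: the main class com.example.Main and the two library file names are made up for illustration and are not what a Helidon build actually generates.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.jar.Attributes;
import java.util.jar.Manifest;

public class ThinJarManifestSketch {

    // Hypothetical manifest; the class and JAR names are invented for this example.
    static final String SAMPLE =
        "Manifest-Version: 1.0\n"
        + "Main-Class: com.example.Main\n"
        + "Class-Path: libs/first-library.jar libs/second-library.jar\n";

    // Returns the space-separated Class-Path entries of the given manifest text.
    static List<String> classPathEntries(String manifestText) {
        try {
            Manifest manifest = new Manifest(
                new ByteArrayInputStream(manifestText.getBytes(StandardCharsets.UTF_8)));
            String classPath =
                manifest.getMainAttributes().getValue(Attributes.Name.CLASS_PATH);
            if (classPath == null) {
                return List.of();
            }
            return Arrays.asList(classPath.trim().split("\\s+"));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Each entry is a path relative to the directory that holds the JAR file.
        classPathEntries(SAMPLE).forEach(System.out::println);
    }
}
```

One consequence of this layout is that the JAR file and its libs folder have to be moved or copied together; the JAR file alone will not start.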

After building, for example by running mvn package, you can run the generated JAR file using the following command:

java -jar target/example.jar

This command will start the Helidon server, and this time you do get a fixed default port, 7001. Besides the MicroProfile APIs, which is what Helidon focuses on primarily, the following Jakarta EE APIs are supported:

◉ Jakarta CDI: Weld

◉ Jakarta JSON-P/B: Yasson

◉ Jakarta REST: Jersey

◉ Jakarta WebSockets: Tyrus

◉ Jakarta Persistence: EclipseLink, Hibernate

◉ Jakarta Transactions: Narayana

Piranha Cloud

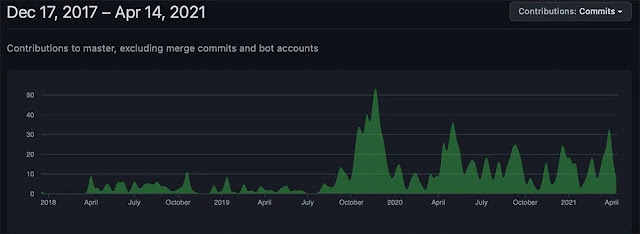

Piranha Cloud is a relatively new project; although it started as a very low-key project a couple of years ago, it didn’t pick up pace until October 2019, as you can see in Figure 1, which shows the GitHub commit graph.
