When you’re programming a chatbot, you need to understand the intent of the user’s input. Here’s how to use Stanford CoreNLP for the task.

Intent recognition is the natural language processing (NLP) task of discovering a user’s objective based on what the user typed or said. Intent recognition (also known as intent extraction or intent detection) has a wide range of practical applications such as automating the process of fulfilling the user’s needs for sales, booking, and customer service tasks.

In device-control applications, intent recognition is often a key step in converting a human request into a machine-executable command. For example, the spoken or typed phrase “Turn off the speaker” could be identified as a request to switch off the speakers of a smart TV, and that request could then be passed to the television as the speaker.switch(off) command.

There is significant commercial interest in using intent recognition for written or spoken words because the technique plays a key role in enabling human-computer interaction through natural language.

In particular, the technique is widely used in chatbots, which are programs that imitate human conversation with users through written text or speech. A smart chatbot should be able to understand a user’s needs and take appropriate actions or provide requested information. Thus, a food-ordering chatbot must recognize a customer’s intent to place an order, and a question-answering chatbot must recognize what the user is asking before it can provide an appropriate answer.

Natural language is messy, inconsistent, and highly variable, and that makes intent recognition challenging. To perform this task successfully, an application needs to be good at syntax-driven sentence segmentation, part-of-speech tagging, and dependency parsing. This is where libraries such as Stanford CoreNLP can help. This article provides a simple example of how Java applications can use Stanford CoreNLP to classify user input based on what the user wants to achieve.

Syntactic dependency analysis

In NLP, the main enabling technology for intent recognition is syntactic dependency parsing, which is used to determine the relationships between words in a sentence. A syntactic dependency represents a relation between two words in a sentence, where one word is the syntactic governor (called the head or parent) and the other is the dependent (called the child). In this context, the governor relation is the inverse of the dependent relation.

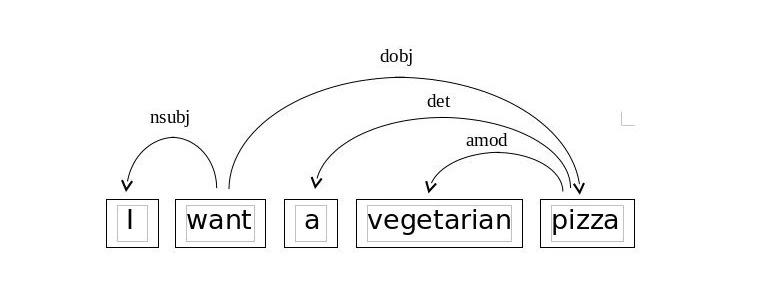

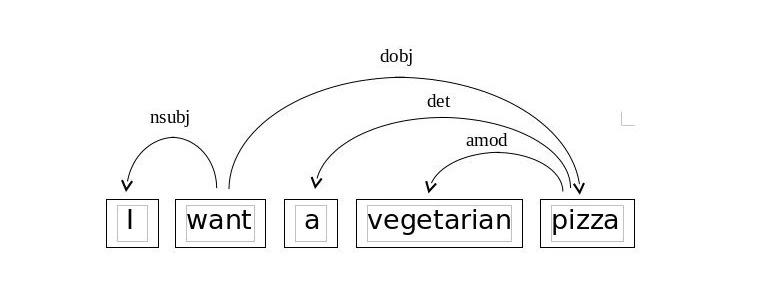

Consider the sentence, “I want a vegetarian pizza.” In the pair “want, pizza” extracted from this sentence, the word “pizza” is the child and the word “want” is the head, because the latter is the verb in what’s called a verb phrase. Another syntactically related pair from this same sentence is “vegetarian pizza,” which forms a noun phrase: The noun “pizza” is the head and “vegetarian” is its child.

The syntactic dependencies of a sentence form a tree, enabling the most informative pairs of words in the sentence to be discovered programmatically. In a diagram, a syntactic dependency tree is usually depicted as a set of arched arrows, where each arrow connects the head with the child of the relation (an arrow points from the head to the child).

The syntactic dependency label of the child is used as the arc label to describe the type of syntactic relation. For example, the arc labeled with the dependency label dobj (which stands for direct object) will connect the transitive verb and the direct object of the sentence. Figure 1 illustrates what this may look like.

Figure 1. The syntactic dependency tree of a sentence
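To make the head, child, and arc-label terminology concrete, here is a minimal sketch in plain Java that writes out the arcs of “I want a vegetarian pizza.” by hand. The DependencyArcs class and its Arc type are hypothetical names used only for illustration and are not part of Stanford CoreNLP; the sketch also assumes each word occurs once in the sentence, whereas a real parser identifies tokens by position.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical illustration class; not part of Stanford CoreNLP.
class DependencyArcs {

    // One labeled arc pointing from the head (governor) to the child (dependent).
    static final class Arc {
        final String head;
        final String child;
        final String label;

        Arc(String head, String child, String label) {
            this.head = head;
            this.child = child;
            this.label = label;
        }

        @Override
        public String toString() {
            return label + "(" + head + " -> " + child + ")";
        }
    }

    // Hand-written arcs for "I want a vegetarian pizza."
    // Assumption: words are unique, so a word can stand in for its token.
    static List<Arc> exampleArcs() {
        return Arrays.asList(
            new Arc("want", "I", "nsubj"),            // subject of the verb
            new Arc("want", "pizza", "dobj"),         // direct object of the verb
            new Arc("pizza", "a", "det"),             // determiner of the noun
            new Arc("pizza", "vegetarian", "amod"));  // adjective modifying the noun
    }

    // The root of the tree is the one head that never appears as a child.
    static String findRoot(List<Arc> arcs) {
        Set<String> children = new HashSet<>();
        for (Arc arc : arcs) {
            children.add(arc.child);
        }
        for (Arc arc : arcs) {
            if (!children.contains(arc.head)) {
                return arc.head;
            }
        }
        return null; // no arcs at all
    }

    // Collects the children attached to the given head, in arc order.
    static List<String> childrenOf(String head, List<Arc> arcs) {
        List<String> result = new ArrayList<>();
        for (Arc arc : arcs) {
            if (arc.head.equals(head)) {
                result.add(arc.child);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Arc> arcs = exampleArcs();
        for (Arc arc : arcs) {
            System.out.println(arc);
        }
        System.out.println("root: " + findRoot(arcs));
    }
}
```

In this sketch the root is the verb “want,” and the dobj arc is the one that links it to “pizza.”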

Given the example dependency tree, your task is to identify the pair that best describes the intent expressed in the sentence, so that you get just a couple of words as the sentence’s intent identifier. In general, the steps in the process of extracting a user’s intent from a sentence can be described as follows:

◉ Parse the sentence into tokens (words).

◉ Connect the tokens with labeled arcs representing syntactic relations.

◉ Navigate the arcs to extract the relevant tokens.

With libraries such as Stanford CoreNLP, the first two steps are a breeze: All you need to do is initialize some classes available in the library to obtain the objects that represent the syntactic structure of the submitted text.

The third step, however, is the responsibility of your own algorithm, which must examine the objects and determine the words (that is, the tokens) that are key for identifying the intent expressed in the sentence. In many cases, though, the task is reduced to extracting the sentence’s transitive verb and direct object as the word pair that best reflects the user’s intent.
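The third step can be sketched independently of any NLP library. The example below assumes the first two steps have already produced a list of labeled arcs (written by hand here) and simply looks for the arc labeled dobj. The VerbObjectExtractor class and its Arc type are hypothetical names for illustration only, and returning the first match is one possible design choice among several.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Hypothetical illustration class; not part of Stanford CoreNLP.
class VerbObjectExtractor {

    // One labeled arc pointing from the head (governor) to the child (dependent).
    static final class Arc {
        final String head;
        final String child;
        final String label;

        Arc(String head, String child, String label) {
            this.head = head;
            this.child = child;
            this.label = label;
        }
    }

    // Returns {transitive verb, direct object} for the first dobj arc,
    // or an empty Optional when the sentence has no direct object.
    static Optional<String[]> verbAndObject(List<Arc> arcs) {
        for (Arc arc : arcs) {
            if ("dobj".equals(arc.label)) {
                return Optional.of(new String[] {arc.head, arc.child});
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Hand-written arcs for "I want a vegetarian pizza."
        List<Arc> order = Arrays.asList(
            new Arc("want", "I", "nsubj"),
            new Arc("want", "pizza", "dobj"),
            new Arc("pizza", "a", "det"),
            new Arc("pizza", "vegetarian", "amod"));

        // Hand-written arc for "Good evening.", which has no direct object.
        List<Arc> greeting = Arrays.asList(
            new Arc("evening", "Good", "amod"));

        Optional<String[]> pair = verbAndObject(order);
        System.out.println(pair.isPresent()
            ? pair.get()[0] + " + " + pair.get()[1]
            : "no explicit intent");
        System.out.println(verbAndObject(greeting).isPresent()
            ? "intent found"
            : "no explicit intent");
    }
}
```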

Intent recognition with Stanford CoreNLP

Stanford CoreNLP includes the SemanticGraph class, which is designed to represent the semantic graph of a sentence, through which you can get access to the sentence’s syntactic dependency tree. The edges of a graph are objects that represent grammatical relations in the dependency tree.

The following is a simple example of how you can take advantage of those objects to identify and then extract the intent from a submitted sentence. First, implement a class with two methods: one that initializes an NLP pipeline, and one that uses this pipeline to perform sentence-by-sentence syntactic dependency parsing and identify the intent of each sentence.

    // nlpPipeline.java
    import java.util.Properties;

    import edu.stanford.nlp.ling.CoreAnnotations;
    import edu.stanford.nlp.pipeline.Annotation;
    import edu.stanford.nlp.pipeline.StanfordCoreNLP;
    import edu.stanford.nlp.semgraph.SemanticGraph;
    import edu.stanford.nlp.semgraph.SemanticGraphCoreAnnotations;
    import edu.stanford.nlp.semgraph.SemanticGraphEdge;
    import edu.stanford.nlp.util.CoreMap;

    public class nlpPipeline {

        static StanfordCoreNLP pipeline;

        // Creates the pipeline with the annotators the dependency parser needs.
        public static void init() {
            Properties props = new Properties();
            props.setProperty("annotators", "tokenize, ssplit, pos, parse");
            pipeline = new StanfordCoreNLP(props);
        }

        // Prints each sentence of the text together with the intent found in it.
        public static void findIntent(String text) {
            Annotation annotation = pipeline.process(text);
            for (CoreMap sentence : annotation.get(CoreAnnotations.SentencesAnnotation.class)) {
                SemanticGraph sg =
                    sentence.get(SemanticGraphCoreAnnotations.BasicDependenciesAnnotation.class);
                String intent = "It does not seem that the sentence expresses an explicit intent.";
                for (SemanticGraphEdge edge : sg.edgeIterable()) {
                    // Compare strings with equals(); == would compare object references.
                    if ("direct object".equals(edge.getRelation().getLongName())) {
                        String tverb = edge.getGovernor().originalText();
                        String dobj = edge.getDependent().originalText();
                        // Capitalize the object so that the pair reads as verbObject.
                        dobj = dobj.substring(0, 1).toUpperCase() + dobj.substring(1).toLowerCase();
                        intent = tverb + dobj;
                    }
                }
                System.out.println("Sentence:\t" + sentence);
                System.out.println("Intent:\t\t" + intent + "\n");
            }
        }
    }

In the class above, the init() method initializes the dependency parser in the Stanford CoreNLP pipeline being created, along with the tokenizer, the sentence splitter, and the part-of-speech tagger, which are the annotators the parser depends on. To implement this, the corresponding list of annotators is set as a property and passed to the StanfordCoreNLP() constructor.

The pipeline object is then used in the findIntent() method of the class to process the text passed in. In particular, the pipeline’s process() method returns an annotation object that stores the annotations generated by all the annotators used in the pipeline.

By iterating over the sentences in the annotation object created previously, you get a sentence-level CoreMap object for each one, from which you retrieve the semantic graph of the sentence. Next, in an inner loop, you iterate over the graph edges, trying to find a relation whose long name is direct object (if it exists, it connects the direct object with the transitive verb in the sentence). If a sentence contains more than one such relation, the last one found determines the intent. This example also concatenates the transitive verb and its direct object to express the intent as a single word. You could use this string as an intent identifier in later processing.
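As one illustration of such later processing, the following sketch builds an identifier in the same verb-plus-capitalized-object form and looks it up in a table of replies. The IntentDispatcher class, the reply texts, and the cancelOrder entry are hypothetical and exist only for this example; Locale.ROOT is added here so that the capitalization does not depend on the machine’s default locale.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical illustration class; not part of Stanford CoreNLP.
class IntentDispatcher {

    // Hypothetical replies keyed by intent identifier.
    static final Map<String, String> REPLIES = new HashMap<>();

    static {
        REPLIES.put("wantPizza", "Which size would you like?");
        REPLIES.put("cancelOrder", "Your order has been cancelled.");
    }

    // Joins the verb and the capitalized direct object, for example "wantPizza".
    // The verb is left unchanged, as in the findIntent() method.
    static String toIntentId(String verb, String directObject) {
        String capitalized = directObject.substring(0, 1).toUpperCase(Locale.ROOT)
            + directObject.substring(1).toLowerCase(Locale.ROOT);
        return verb + capitalized;
    }

    // Looks up a reply for the identifier, with a fallback for unknown intents.
    static String reply(String intentId) {
        return REPLIES.getOrDefault(intentId, "Sorry, I did not understand that.");
    }

    public static void main(String[] args) {
        String intentId = toIntentId("want", "pizza");
        System.out.println(intentId + ": " + reply(intentId));
        System.out.println("greetBot: " + reply("greetBot"));
    }
}
```

A lookup table keeps the mapping from identifiers to actions in one place, so that supporting a new intent only requires adding an entry.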

The code below invokes the findIntent() method defined in the class above, passing in a discourse you might submit to a chatbot that takes orders for a pizza restaurant.

    // SentenceIntent.java
    public class SentenceIntent {

        public static void main(String[] args) {
            String text = "Good evening. I want a vegetarian pizza.";
            nlpPipeline.init();
            nlpPipeline.findIntent(text);
        }
    }

After setting up Stanford CoreNLP (its JAR files, including the models, must be on the classpath), you can compile the nlpPipeline and SentenceIntent classes and then run SentenceIntent, as follows:

    $ javac nlpPipeline.java
    $ javac SentenceIntent.java
    $ java SentenceIntent

The generated output should look as follows:

    Sentence:   Good evening.
    Intent:     It does not seem that the sentence expresses an explicit intent.

    Sentence:   I want a vegetarian pizza.
    Intent:     wantPizza

Source: oracle.com
