GraalVM directly supports Python and R, and this opens the world of dynamic scripting and data science libraries to your Java projects.

GraalVM is an open source project from Oracle that includes a modern just-in-time (JIT) compiler for Java bytecode, allows ahead-of-time (AOT) compilation of Java code into native binaries, provides a rich tooling ecosystem, and supports fast execution and integration of other languages.

GraalVM supports Python and R (among other languages), which opens the world of dynamic scripting and data science libraries to Java projects. Since scripting languages do not need a compilation step, applications written for GraalVM can allow users to easily extend them using any dynamic language supported by GraalVM. These user scripts are then JIT compiled and optimized with the Java code to provide optimal performance.

This article demonstrates how a Java application can be enhanced with the polyglot capabilities of GraalVM, using a Micronaut chat application as an example.

Get started with Micronaut

Micronaut is a microservice framework that works well with GraalVM. To keep things simple, this article uses one of the Micronaut examples, a chat app built on WebSockets. This app provides chat rooms with topics. A new user can join any chat room by browsing to http://localhost:8080/#/{topicName}/{userName}. Under the hood, the app is a WebSocket server.

Imagine you designed such an application for a customer and now this customer asks for rich, programmable customization options. On GraalVM, this can be very simple indeed. Look at the following message validation in the example chat app:

    private Predicate<WebSocketSession> isValid(String topic) {
        return s -> topic.equalsIgnoreCase(s.getUriVariables().get("topic", String.class, null));
    }

Right now, the validation ensures that messages sent from one chat room are broadcast only to the users connected to the same chat room; that is, the topic that the sender is connected to matches that of the receiver.

The power of GraalVM’s polyglot API makes this more flexible! Instead of a hardcoded predicate, write a Python function that will act as a predicate. Something like this will do the same job.

    import java

    def is_valid(topic):
        return lambda s: topic.lower() == s.getUriVariables().get("topic", java.lang.String, None).lower()

To test it, put it in a static String named PYTHON_SOURCE and then use it as follows:

    private static final String PYTHON_SOURCE = /* ... */;

    private Context polyglotContext = Context.newBuilder("python").allowAllAccess(true).build();

    private Predicate<WebSocketSession> isValid(String topic) {
        polyglotContext.eval("python", PYTHON_SOURCE);
        Value isValidFunction = polyglotContext.getBindings("python").getMember("is_valid");
        return isValidFunction.execute(topic).as(Predicate.class);
    }

That’s it! So, what is happening here?

The Python code defines a new function, is_valid, which accepts an argument and returns a lambda (a nested function) that closes over that argument. The lambda also accepts an argument, a WebSocketSession. Even though that argument is not typed in Python and is a plain old Java object, you can call its Java methods seamlessly from Python. Argument mapping from Python None to Java null is handled automatically by GraalVM.
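The closure behavior can be tried in plain Python, outside GraalVM. The following is a minimal sketch that assumes two hypothetical stand-ins, FakeSession and FakeUriVariables, for the Java WebSocketSession and its URI variables; they are not part of Micronaut or GraalVM. It also includes a hypothetical null-safe variant, is_valid_safe, because calling .lower() on None raises an error in Python, whereas Java's equalsIgnoreCase(null) simply returns false.

```python
class FakeUriVariables:
    """Hypothetical stand-in for the Java object returned by getUriVariables()."""

    def __init__(self, values):
        self._values = values

    def get(self, name, required_type, default):
        # Mirrors the (name, type, default) call shape used in the article.
        return self._values.get(name, default)


class FakeSession:
    """Hypothetical stand-in for a WebSocketSession."""

    def __init__(self, topic=None):
        self._vars = FakeUriVariables({} if topic is None else {"topic": topic})

    def getUriVariables(self):
        return self._vars


def is_valid(topic):
    # Same shape as the article's function, with str in place of java.lang.String.
    return lambda s: topic.lower() == s.getUriVariables().get("topic", str, None).lower()


def is_valid_safe(topic):
    # Assumed variant: treat a missing topic as "no match" instead of raising.
    def predicate(s):
        other = s.getUriVariables().get("topic", str, None)
        return other is not None and topic.lower() == other.lower()

    return predicate


same_room = is_valid("Java")
print(same_room(FakeSession("JAVA")))        # topics match, ignoring case
print(same_room(FakeSession("python")))      # topics differ
print(is_valid_safe("Java")(FakeSession()))  # session without a topic
```

Each call to is_valid produces a separate predicate bound to its own topic, which is what lets the Java side request one predicate per chat room.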

Here, you’ll see that the Java code has been extended with a private context field that represents the entry point into the polyglot world of GraalVM. You just create a new context with access to the Python language in which your script runs. This example grants the script full access with allowAllAccess(true). However, because it’s not wise to allow scripts to do whatever they want, GraalVM offers sandboxing to restrict what a dynamic language can do at runtime. (More about that will come in a future article.)

The isValid method evaluates the Python code. Python code evaluated like this is run in the Python global scope, which you can access with the getBindings method. The GraalVM API uses the Value type to represent dynamic language values to Java, with various methods to read members, array elements, and hash entries; to test properties, such as whether a value is numeric or represents a string; or to execute functions and invoke methods.

The code above retrieves the is_valid member of the global scope. This is the Python function object you have defined, and you can execute it with the topic String. The result is again of type Value, but you need a java.util.function.Predicate. GraalVM has your back here, too, and allows casting dynamic values to Java interfaces. The appropriate methods are transparently forwarded to the underlying Python object.

Wrapping Python scripts into beans

Of course, hardcoding Python code strings and manually instantiating polyglot contexts is not the proper way to structure a Micronaut app. A better approach is to make the context and engine objects available as beans, so they can be injected into other beans managed by Micronaut. Since you cannot annotate third-party classes, do this by implementing a Factory, as follows:

    @Factory
    public class PolyglotContextFactories {

        @Singleton
        @Bean
        Engine createEngine() {
            return Engine.newBuilder().build();
        }

        @Prototype
        @Bean
        Context createContext(Engine engine) {
            return Context.newBuilder("python")
                    .allowAllAccess(true)
                    .engine(engine)
                    .build();
        }
    }

Each instance of the GraalVM polyglot context represents an independent global runtime state; different contexts behave as if you had launched separate Python processes. The Engine object can be thought of as a cache for optimized and compiled Python code. It can be shared between several contexts, so warmup does not have to be repeated as new contexts are created.

Micronaut supports injection of configuration values from multiple sources. One of them is application.yml, which you can use to define where the Python code lives, as follows:

    micronaut:
      application:
        name: websocket-chat
    scripts:
      python-script: "classpath:scripts/script.py"

You can then access this script and expose its objects in another bean, as follows:

    @Factory
    @ConfigurationProperties("scripts")
    public class PolyglotMessageHandlerFactory {

        private Readable pythonScript;

        public Readable getPythonScript() { return pythonScript; }

        public void setPythonScript(Readable script) { pythonScript = script; }

        @Prototype
        @Bean
        MessageHandler createPythonHandler(Context context) throws IOException {
            Source source = Source.newBuilder("python", pythonScript.asReader(), pythonScript.getName()).build();
            context.eval(source);
            return context.getBindings("python").getMember("ChatMessageHandler").as(MessageHandler.class);
        }
    }

As before, the Java code expects the Python script to define a certain member, but this time you are casting it to a MessageHandler interface. The following is how that is defined:

    public interface MessageHandler {
        boolean isValid(String sender, String senderTopic, String message, String rcvr, String rcvrTopic);

        String createMessage(String sender, String message);
    }

You can use this interface in the chat component, as follows:

    @ServerWebSocket("/ws/chat/{topic}/{username}")
    public class ChatWebSocket {

        private final MessageHandler messageHandler;

        // ...

        public ChatWebSocket(WebSocketBroadcaster broadcaster, MessageHandler messageHandler) {
            // ...
        }

        // ...

        @OnMessage
        public Publisher<String> onMessage(String topic, String username, String message, WebSocketSession s) {
            String msg = messageHandler.createMessage(username, message);
            // ...
        }
    }

Phew! That was a lot. But now you have the flexibility to provide different scripts in the application config, and the polyglot context is created and the script is run only when the app needs them. The contract for the Python script is simply that it must export an object with an isValid and a createMessage function to match the Java MessageHandler interface. Beyond that, you can use the full power of Python in message transformations.

    import re
    import sys

    class ChatMessageHandler:
        # No self parameter: Java calls these functions on the class object itself.
        def createMessage(sender, msg):
            match = re.match("/([^ ]+)", msg)
            if match:
                try:
                    if match.group(1) == "=":
                        return f"You sent a command with arguments: {msg.split()[-1]}"
                    else:
                        return "Unknown command!"
                except:
                    return f"Error during command execution: {sys.exc_info()[0]}"
            return f"[{sender}] {msg}"

        def isValid(sender, senderTopic, message, receiver, receiverTopic):
            return senderTopic == receiverTopic

What’s going on? This defines two functions on a class, ChatMessageHandler, to match the Java interface. The class object (not an instance!) lives in the Python global scope, which is where the Java code picks it up through getBindings. The validation code is essentially the same as before, only simplified because the arguments are different.
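Why the functions take no self parameter can be checked in plain Python. The sketch below uses a hypothetical EchoHandler class and a hypothetical helper, missing_members, that reports which of the contract's functions an object lacks; neither is part of the chat app.

```python
# Function names taken from the Java MessageHandler interface.
REQUIRED_MEMBERS = ("isValid", "createMessage")


class EchoHandler:
    # No self parameter: the functions are meant to be called on the class object.
    def createMessage(sender, msg):
        return f"[{sender}] {msg}"

    def isValid(sender, senderTopic, message, receiver, receiverTopic):
        return senderTopic == receiverTopic


def missing_members(handler, required=REQUIRED_MEMBERS):
    """Return the required names that are absent or not callable on handler."""
    return [name for name in required if not callable(getattr(handler, name, None))]


# Called on the class object, the arguments line up with the parameters.
print(EchoHandler.createMessage("alice", "hi"))

# Called on an instance, Python passes the instance as an extra first argument.
try:
    EchoHandler().createMessage("alice", "hi")
except TypeError as error:
    print("instance call failed:", error)

print(missing_members(EchoHandler))  # nothing missing
print(missing_members(object()))     # both functions missing
```

A check along these lines could run before a script is handed to the Java side, so that a script that breaks the contract fails early with a clear message.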

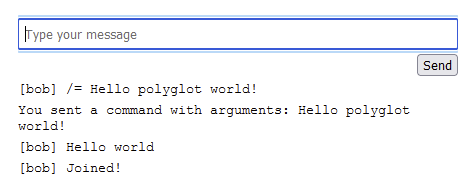

The createMessage transformation, however, is more interesting. Many chat systems treat a leading slash / specially, allowing bots or extended chat commands to be run like in a command-line interface. You can provide the same here, by matching such commands. Right now, the chat supports exactly one command, /=, which simply echoes its argument, as seen in Figure 1.
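To see how the transformation treats different inputs, the handler can be exercised directly in plain Python. The sketch below repeats the createMessage function from the script, with the imports it needs, and feeds it a few made-up messages. Note that msg.split()[-1] takes only the last whitespace-separated word, so /= echoes just the final word of a longer argument.

```python
import re
import sys


class ChatMessageHandler:
    def createMessage(sender, msg):
        # A leading slash followed by non-space characters marks a command.
        match = re.match("/([^ ]+)", msg)
        if match:
            try:
                if match.group(1) == "=":
                    return f"You sent a command with arguments: {msg.split()[-1]}"
                else:
                    return "Unknown command!"
            except Exception:
                return f"Error during command execution: {sys.exc_info()[0]}"
        # Anything else is an ordinary chat message.
        return f"[{sender}] {msg}"


# Made-up sample messages, for illustration only.
for text in ["/= hello", "/= hello world", "/shrug", "hi there", "hi /= x"]:
    print(repr(text), "->", ChatMessageHandler.createMessage("alice", text))
```

Because re.match anchors at the start of the string, a slash in the middle of a message is treated as ordinary text.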