Machine learning (ML) is important because it can derive insights and make predictions using an appropriate dataset. As the amount of data being generated increases globally, so do the potential applications of ML. Specific ML algorithms can be difficult to implement, since doing so requires significant theoretical and practical expertise.

Fortunately, many of the most useful ML algorithms have already been implemented and bundled together into packages called libraries. Because so many libraries are currently available, the best ones for performing ML need to be identified and studied.

Scikit-learn is a well-established Python ML library that is widely used in industry. Tribuo is a Java ML library that Oracle recently open-sourced. At first glance, Tribuo provides many important tools for ML, but there is limited published research studying its performance.

This project compares the scikit-learn library for Python and the Tribuo library for Java. The focus of this comparison is on the ML tasks of classification, regression, and clustering. This includes evaluating the results from training and testing several different models for each task.

This study showed that the new Tribuo ML library is a viable, competitive offering and should certainly be considered when ML solutions in Java are implemented.

This article explains the methodology of this work; describes the experiments that compare the two libraries; discusses the results of the experiments and other findings; and, finally, presents the conclusions. This article assumes that readers are familiar with ML’s goals and terminology.

Methodology

To make a comparison between scikit-learn and Tribuo, the tasks of classification, regression, and clustering were considered. While each task was unique, a common methodology was applicable to each task. The flowchart shown in Figure 1 illustrates the methodology followed in this work.

Figure 1. A flowchart that illustrates the methodology of this work

Identifying a dataset appropriate to the task was the logical first step. The dataset needed to be large enough that a model could be trained with a sufficient amount of data, yet small enough that the models being developed could be trained in a reasonable amount of time. It also needed to have features that could be used without excessive preprocessing.

This work focused on the comparison of two ML libraries, not on the preprocessing of data. With that said, it is almost always the case that a dataset will need some preprocessing.

The data preprocessing steps were completed in Jupyter notebooks, entirely in Python and occasionally with scikit-learn’s preprocessing functionality. Any required cleanup, scaling, and one-hot encoding were done during this step. Fortunately, Sebastian Raschka and Vahid Mirjalili, in Python Machine Learning (third edition), provide several clear examples of when these types of changes to data are required.
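The scaling and one-hot encoding steps mentioned above can be sketched in plain Python. This is a minimal illustration, not the notebooks’ actual code; the function names and the `wind_dir` column are hypothetical. The scaling follows the convention of scikit-learn’s `StandardScaler`, which divides by the population standard deviation.

```python
from statistics import mean, pstdev


def standard_scale(values):
    """Rescale a numeric column to zero mean and unit variance.

    Uses the population standard deviation, the convention StandardScaler follows.
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map every value to 0.0.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def one_hot(values, prefix):
    """Expand a categorical column into one 0/1 indicator column per category."""
    categories = sorted(set(values))
    return {f"{prefix}_{c}": [1 if v == c else 0 for v in values] for c in categories}


# Hypothetical usage on two tiny columns
print(standard_scale([1.0, 2.0, 3.0]))
print(one_hot(["N", "S", "N"], "wind_dir"))  # {'wind_dir_N': [1, 0, 1], 'wind_dir_S': [0, 1, 0]}
```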

Once the data preprocessing was complete, the data was re-exported to a comma-separated values (CSV) file. Having a single, preprocessed dataset facilitated the comparison of a specific ML task between the two libraries by isolating the training, testing, and evaluation of the algorithms. For example, the classification algorithms from the Tribuo Java library and the scikit-learn Python library could load exactly the same data file. This aspect of the experiments was controlled very carefully.
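A minimal sketch of that hand-off follows. It assumes that every column, including the label, is numeric after preprocessing, and the function names are hypothetical. The point is that the Python and Java notebooks read one shared file rather than each preprocessing the raw data separately.

```python
import csv


def export_preprocessed(path, header, rows):
    """Write the single preprocessed dataset that every experiment loads."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)


def load_preprocessed(path):
    """Read the shared file back as (header, rows), parsing each value as a float."""
    with open(path, newline="") as f:
        reader = csv.reader(f)
        header = next(reader)
        rows = [[float(x) for x in row] for row in reader]
    return header, rows
```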

Choosing comparable algorithms. To make an accurate comparison between scikit-learn and Tribuo, it was important that the same algorithms were compared. This third step, defining the algorithms common to both libraries, required studying each library to identify which of its algorithms could be compared accurately. For example, for the clustering task, Tribuo currently supports only K-Means and K-Means++, so these were the only algorithms common to both libraries that could be compared. Furthermore, it was critical that each algorithm’s parameters were precisely controlled in each library’s specific implementation.

To continue with the clustering example, when the K-Means++ object for each library was instantiated, the following parameters were used:

◉ maximum iterations = 100

◉ number of clusters = 6

◉ number of processors to use = 4

◉ random seed for centroid initialization (for deterministic results) = 1
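To show what these parameters control, here is a minimal pure-Python sketch of K-Means with k-means++ seeding. It is not either library’s implementation. It is single-threaded, so the processor-count parameter has no counterpart, and the names `kmeans`, `kmeans_pp_init`, and `nearest_centroid` are hypothetical. The `seed` argument corresponds to the last parameter in the list: it fixes the randomness used to choose the initial centroids, so repeated runs give identical results.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid(p, centroids):
    """Index of the centroid closest to point p."""
    return min(range(len(centroids)), key=lambda j: sq_dist(p, centroids[j]))


def kmeans_pp_init(points, k, rng):
    """k-means++ seeding: after a uniformly random first centroid, each new centroid
    is drawn with probability proportional to its squared distance from the
    nearest centroid chosen so far."""
    centroids = [list(rng.choice(points))]
    while len(centroids) < k:
        weights = [min(sq_dist(p, c) for c in centroids) for p in points]
        total = sum(weights)
        if total == 0:
            # Every point coincides with an existing centroid; fall back to uniform.
            centroids.append(list(rng.choice(points)))
            continue
        threshold = rng.random() * total
        cumulative = 0.0
        for p, w in zip(points, weights):
            cumulative += w
            if cumulative > threshold:
                centroids.append(list(p))
                break
    return centroids


def kmeans(points, n_clusters=6, max_iter=100, seed=1):
    """Lloyd's algorithm with k-means++ initialization; returns (centroids, labels)."""
    rng = random.Random(seed)  # fixed seed -> deterministic initial centroids
    centroids = kmeans_pp_init(points, n_clusters, rng)
    labels = []
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        labels = [nearest_centroid(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(n_clusters):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged before reaching max_iter
        centroids = new_centroids
    return centroids, labels
```

With the study’s settings, a call would look like `kmeans(points, n_clusters=6, max_iter=100, seed=1)`.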

The next step was to identify each library’s best algorithm for a specific ML task. This involved testing and tuning several different algorithms and their parameters to see which one performed the best. For some people, this is when ML is really fun!

Concretely, for the regression task, the random forest regressor and the XGBoost regressor were found to be the best for scikit-learn and Tribuo, respectively. Being the best in this context meant achieving the best score on the task’s evaluation metric. The process of selecting the optimal set of hyperparameters for a learning algorithm is known as hyperparameter optimization.
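Hyperparameter optimization can be as simple as an exhaustive grid search. The sketch below is a generic, hypothetical helper, not the tuning procedure used in this work, which the article does not detail. Here `score_fn` stands in for training a model with the given hyperparameters and returning its validation score. scikit-learn’s `GridSearchCV` provides a cross-validated version of the same idea.

```python
from itertools import product


def grid_search(score_fn, param_grid, higher_is_better=True):
    """Evaluate every combination in param_grid and return (best_params, best_score).

    param_grid maps each hyperparameter name to the list of values to try.
    Set higher_is_better=False for error metrics such as RMSE.
    """
    names = sorted(param_grid)
    best_params, best_score = None, None
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        improved = best_score is None or (
            score > best_score if higher_is_better else score < best_score
        )
        if improved:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical usage: a toy scoring function standing in for model training
def toy_score(max_depth, n_estimators):
    return -(max_depth - 4) ** 2 + n_estimators / 100


print(grid_search(toy_score, {"max_depth": [2, 4, 8], "n_estimators": [50, 100]}))
# ({'max_depth': 4, 'n_estimators': 100}, 1.0)
```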

A side note: In recent years, automated machine learning (AutoML) has emerged as a way to save time and effort in the process of hyperparameter optimization. AutoML is also capable of performing model selection, so in theory this entire process could be automated. However, the investigation and use of AutoML tools was out of the scope of this work.

Evaluating the algorithms. Once the preprocessed datasets were available, the libraries’ common algorithms had been defined, and the libraries’ best-scoring algorithms had been identified, it was time to carefully evaluate the algorithms. For the classification and regression tasks, this involved verifying that both libraries split the dataset into training and test data in exactly the same way. It also required writing evaluation functions that produced similar output in both Python and Java for each of the ML tasks.
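One way to carry out that verification, sketched here as an assumption rather than as the study’s actual check, is to hash the rows of each split in order and compare the digests printed by the Python and Java notebooks. Hashing the raw CSV text fields, rather than parsed numbers, avoids differences in how Python and Java format floating-point values. The helper name is hypothetical. On the Java side, `java.security.MessageDigest` with the `"SHA-256"` algorithm could compute the same digest.

```python
import hashlib


def split_fingerprint(rows):
    """SHA-256 digest over a split's rows, in order.

    Each row is a list of raw CSV text fields. Fields are joined with commas and
    each row ends with a newline before hashing, so another language can reproduce it.
    """
    digest = hashlib.sha256()
    for row in rows:
        digest.update((",".join(row) + "\n").encode("utf-8"))
    return digest.hexdigest()
```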

Jupyter notebooks were used for these comparisons. Because there were two libraries and three ML tasks, six different notebooks were evaluated: Each notebook performed the training and testing of the algorithms for one ML task using one of the libraries. In this work, a notebook is also referred to as an experiment.

Throughout this study many, many executions of each notebook were performed for testing, tuning, and so forth. Once everything was finalized, three independent executions of each experiment were made in a very controlled way. This meant that the test system was running only the essential applications, to ensure that a maximum amount of CPU and memory resources were available to the experiment being executed. The results were recorded directly in the notebooks.

The final step in the methodology of this work was to compare the results. This included averaging each model’s three recorded training times, as well as each algorithm’s three recorded evaluation metrics. These results were all reviewed to ensure that the values were consistent and that no obvious reporting error had been made.
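The aggregation step is simple arithmetic, and a minimal sketch follows. Reporting the sample standard deviation alongside the mean is an addition here, not something the study describes, but it makes an inconsistent run easy to spot. The function name is hypothetical.

```python
from statistics import mean, stdev


def summarize_runs(measurements):
    """Return the mean of repeated measurements (for example, three training times)
    together with their sample standard deviation."""
    spread = stdev(measurements) if len(measurements) > 1 else 0.0
    return {"mean": mean(measurements), "stdev": spread}
```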

Experiments

The three ML tasks of classification, regression, and clustering are described in this section. Note that the version of scikit-learn used was 0.24.1 with Python 3.9.1. The version of Tribuo was 4.1 with Java 12.0.2. All of the experiments, the datasets, and the preprocessing notebooks are available for review online in my GitHub repository.

Classification. The classification task of this work focused on predicting whether it would rain the next day, based on a set of weather observations collected for the current day. The Kaggle dataset used was Rain in Australia. The dataset contained 140,787 records after preprocessing, where each record was a detailed set of weather information, such as the current day’s minimum temperature and wind information. To clean up the data, features with large numbers of missing values were removed. Categorical features were one-hot encoded, and numeric features were scaled.

Three classification algorithms common to each library were compared: stochastic gradient descent, logistic regression, and decision tree classifier. The algorithm that obtained the best score for the scikit-learn library using this data was the multi-layer perceptron. For Tribuo, the best-scoring algorithm was the XGBoost classifier.

F1 scores were used to compare each algorithm’s ability to make correct predictions. F1 was the most appropriate metric because the dataset was unbalanced: Of the 28,158 records in the test data, 21,918 recorded no rain and only 6,240 indicated rain.
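To see why F1 suits this unbalanced data, consider a hypothetical baseline that always predicts “No” on the 28,158 test records. Its accuracy would be about 0.78, yet its F1 score for the “Yes” class is 0. The sketch below computes per-class F1, the harmonic mean of precision and recall, using the equivalent form 2TP / (2TP + FP + FN). The function name is illustrative; scikit-learn’s `f1_score` provides the same metric.

```python
def f1_for_class(y_true, y_pred, positive):
    """F1 score treating `positive` as the positive class.

    Equivalent to 2 * precision * recall / (precision + recall),
    written as 2TP / (2TP + FP + FN); returns 0.0 when that denominator is 0.
    """
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Hypothetical "always No" baseline on the test-set class counts given above
y_true = ["No"] * 21918 + ["Yes"] * 6240
y_pred = ["No"] * len(y_true)
print(round(f1_for_class(y_true, y_pred, "No"), 4))  # 0.8754
print(f1_for_class(y_true, y_pred, "Yes"))           # 0.0
```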

Regression. The regression task studied here used a dataset containing the attributes of used cars to predict their sale prices. The Kaggle dataset used was Used-cars-catalog, and some examples of the car attributes were mileage, year, make, and model. The preprocessing effort for this dataset was minimal. Some features were dropped, and some records with empty values were removed. Only three columns were one-hot encoded. The resulting dataset contained 38,521 records.

Like the classification task described above, there were three algorithms common to scikit-learn and Tribuo that were compared: stochastic gradient descent, linear regression, and decision tree regressor. The scikit-learn library’s algorithm that achieved the best score was the random forest regressor. The best-scoring algorithm for the Tribuo library was the XGBoost regressor. Root mean square error (RMSE) and the R² score were used to evaluate the algorithms. These are common metrics for evaluating regression tasks.
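Both metrics follow directly from their definitions, as the plain-Python sketch below shows. scikit-learn provides `mean_squared_error` (whose square root is the RMSE) and `r2_score`. RMSE is expressed in the target’s units, here the sale price. R² compares the model’s squared error with that of always predicting the mean, so it is negative for a model that does worse than that baseline.

```python
from math import sqrt


def rmse(y_true, y_pred):
    """Root mean square error, in the same units as the target."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sqrt(sum(squared) / len(squared))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


print(r2([3, 5, 7], [2, 5, 8]))  # 0.75
```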

Something else is worth mentioning: In this experiment for the Tribuo library, sometimes loading the preprocessed dataset took a very long time. This issue has been fixed in the 4.2 release of Tribuo. Although data loading is not the focus of this study, poor data loading performance can be a significant problem when ML algorithms are used.

Clustering. The clustering task used a generated dataset of isotropic Gaussian blobs, which are simply sets of points normally distributed around a defined number of centroids. This dataset used six centroids. In this case, each point had five dimensions. There were a total of six million records in this dataset, making it quite large.
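A dataset of this kind can be sketched with the standard library alone. This is an illustrative generator, not necessarily how the study’s data was produced. scikit-learn’s `make_blobs` is a common choice, but the article does not say which tool was used. Centroids are drawn uniformly from a box, and each coordinate of a point is drawn independently from a normal distribution with the same standard deviation, which is what makes the blobs isotropic. The function name, the box of [-10, 10], and the defaults are assumptions.

```python
import random


def make_isotropic_blobs(n_samples, n_centers, n_features, cluster_std=1.0, seed=1):
    """Return (points, labels, centroids) for Gaussian blobs around random centroids."""
    rng = random.Random(seed)
    centroids = [[rng.uniform(-10.0, 10.0) for _ in range(n_features)]
                 for _ in range(n_centers)]
    points, labels = [], []
    for i in range(n_samples):
        label = i % n_centers  # spread samples evenly across the centroids
        # Same standard deviation in every dimension and no covariance: isotropic.
        points.append([rng.gauss(c, cluster_std) for c in centroids[label]])
        labels.append(label)
    return points, labels, centroids


# Scaled-down version of the study's setup: six centroids, five dimensions
points, labels, centroids = make_isotropic_blobs(600, n_centers=6, n_features=5)
```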

The benefit of using an artificial dataset like this is that each point’s true cluster is known, which is useful for evaluating the quality of the clustering; otherwise, evaluating a clustering task is more difficult. Using these known cluster assignments, the clusters were evaluated with the adjusted mutual information score. This score measures how well a model’s cluster assignments agree with the true assignments, corrected for the agreement expected by chance.
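The score can be computed from the two labelings alone. The sketch below follows the standard definition, using the arithmetic mean of the two labelings’ entropies as the normalizer, which matches the default of scikit-learn’s `adjusted_mutual_info_score`. It is an unoptimized illustration suited to small inputs, and the function names are hypothetical. Because AMI depends only on which points share a cluster, renaming the cluster labels does not change the score.

```python
from collections import Counter
from math import exp, lgamma, log


def _entropy(sizes, n):
    """Shannon entropy (natural log) of a labeling, from its cluster sizes."""
    return -sum((s / n) * log(s / n) for s in sizes if s > 0)


def _log_factorial(x):
    return lgamma(x + 1)


def adjusted_mutual_info(labels_true, labels_pred):
    """AMI = (MI - E[MI]) / (mean(H(true), H(pred)) - E[MI])."""
    n = len(labels_true)
    a = Counter(labels_true)  # sizes of the true clusters
    b = Counter(labels_pred)  # sizes of the predicted clusters
    if len(a) == len(b) == 1:
        return 1.0  # both labelings put every point in a single cluster
    joint = Counter(zip(labels_true, labels_pred))
    mi = sum((nij / n) * log(n * nij / (a[i] * b[j]))
             for (i, j), nij in joint.items())
    # Expected MI over random labelings that keep the same cluster sizes
    # (each n_ij then follows a hypergeometric distribution).
    lf = _log_factorial
    emi = 0.0
    for ai in a.values():
        for bj in b.values():
            for nij in range(max(1, ai + bj - n), min(ai, bj) + 1):
                log_prob = (lf(ai) + lf(bj) + lf(n - ai) + lf(n - bj)
                            - lf(n) - lf(nij) - lf(ai - nij) - lf(bj - nij)
                            - lf(n - ai - bj + nij))
                emi += (nij / n) * log(n * nij / (ai * bj)) * exp(log_prob)
    h_mean = (_entropy(a.values(), n) + _entropy(b.values(), n)) / 2
    return (mi - emi) / (h_mean - emi)


print(round(adjusted_mutual_info([0, 0, 1, 1, 2, 2], [5, 5, 3, 3, 9, 9]), 6))  # 1.0
```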

There are only two clustering algorithms currently implemented in the Tribuo library: K-Means and K-Means++. Since these algorithms are also available in scikit-learn, they could be compared. Other clustering algorithms from the scikit-learn library were tested to see if a better- or equal-scoring model could be identified. Surprisingly, no other scikit-learn algorithm seemed able to complete training within a reasonable amount of time on this large dataset.

Experimental results

Here are the results for classification, regression, and clustering.

Classification. As mentioned above, the F1 score is the best way to evaluate these classification algorithms. F1 scores range from 0 to 1, and a score closer to 1 is better. The F1 scores for both the “Yes” and “No” classes were included; it was important to observe both values since this dataset was unbalanced. The results in Table 1 show that the F1 scores were very close for the algorithms common to both libraries, but Tribuo’s models were slightly better.

Table 1. Classifier results using the same algorithm

Table 2 indicates that Tribuo’s XGBoost classifier obtained the best F1 scores of all the classifiers, in a reasonable amount of time. The time values in the results tables are always the algorithm training times. This work was interested in the model that achieved the best F1 scores, but other situations or applications may be more concerned with model training speed and have more tolerance for incorrect predictions. For those cases, it is worth noting that, for the algorithms common to both libraries, scikit-learn’s training times were shorter than Tribuo’s.

Table 2. Classifier best algorithm results

It is helpful to have a visualization focusing on these F1 scores. Figure 2 shows a stacked column chart combining each model’s F1 score for the Yes class and the No class. Again, this shows Tribuo’s XGBoost classifier model was the best.

Figure 2. A stacked column chart combining each model’s F1 scores

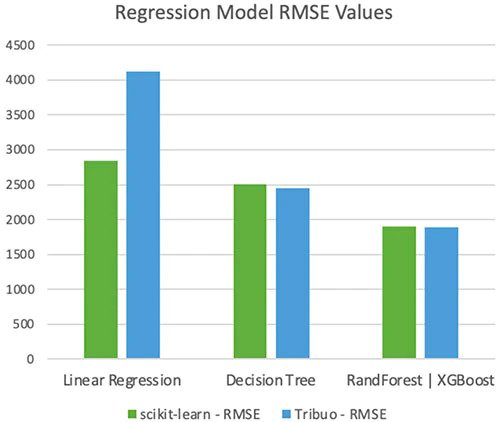

Regression. Keep in mind that a lower RMSE value is better than a higher one, and an R² score closer to 1 is better.

Table 3 shows the results from the regression algorithms common to the scikit-learn and Tribuo libraries. Both libraries’ implementations of stochastic gradient descent scored very poorly, so those huge values are not included here. Of the remaining algorithms common to both libraries, scikit-learn’s linear regression model scored better than Tribuo’s linear regression model, and Tribuo’s decision tree model beat out scikit-learn’s model.

Table 3. Regressor results using the same algorithm

Table 4 shows the results for the model from each library that produced the lowest RMSE value and highest R² score. Here, the Tribuo XGBoost regressor model achieved the best scores, which were just slightly better than those of the scikit-learn random forest regressor.

Table 4. Regressor results for the best algorithm

Visualizations of these tables, which summarize the scores from the regression experiments, reinforce the results. Figure 3 shows a clustered column chart of the RMSE values, while Figure 4 shows a clustered column chart of the R² scores. The poorly scoring stochastic gradient descent models are not included. Recall that the two columns on the right compare each library’s best-scoring model, which is why the scikit-learn random forest model is side by side with the Tribuo XGBoost model.

Figure 3. A clustered column chart comparing the RMSE values

Figure 4. A clustered column chart comparing the R² scores

Clustering. For a clustering model, an adjusted mutual information value of 1 indicates that the predicted clusters agree perfectly with the true cluster assignments.

Table 5 shows the results of the two libraries’ K-Means and K-Means++ algorithms. It is not surprising that most of the models get a 1 for their adjusted mutual information value. This is a result of how the points in this dataset were generated. Only the Tribuo K-Means implementation did not achieve a perfect adjusted mutual information value. It’s worth mentioning again that although there are several other clustering algorithms available in scikit-learn, none of them could finish training using this large dataset.

Table 5. Clustering results

Additional findings

Comparing library documentation. To prepare the ML models for comparison, the scikit-learn documentation was heavily consulted. The scikit-learn documentation is outstanding. The API docs are complete and verbose, and they provide simple, relevant examples. There is also a user guide which provides additional information beyond what’s contained in the API docs. It is easy to find the appropriate information when models are being built and tested.

Good documentation is one of the main goals of the scikit-learn project. At the time of writing, Tribuo does not have an equivalent set of published documentation. The Tribuo API docs are complete and there are helpful tutorials which describe how to perform the standard ML tasks. To perform tasks beyond this requires more effort, but some hints can be found by reviewing the appropriate unit tests in the source code.

Reproducibility. There are certain situations when ML models are used where reproducibility is important. Reproducibility means being able to train or use a model repeatedly with a fixed dataset and observe the same result. This can be difficult to achieve, for example, when a model depends on a random number generator and has been trained several times, because each training run advances the state of the model’s random number generator.

Tribuo provides a feature called Provenance, which is ubiquitous throughout the library’s code. Provenance captures the details of how any dataset, model, and so on was created and modified in Tribuo. This information includes the number of times a model’s random number generator has been used. The main benefit it offers is that any of these objects can be regenerated from scratch, assuming the original training and testing data are used. Clearly this is valuable for reproducibility. Scikit-learn does not have a feature like Tribuo’s Provenance feature.
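The idea of recording how often a random number generator has been used can be illustrated with a small, hypothetical Python class. It is not Tribuo’s Provenance API, which is a Java library feature. Storing the seed and the number of draws is enough to rebuild the generator in exactly the same state, so the next training run can be replayed. This simplified sketch counts only calls to its own `random()` method.

```python
import random


class TrackedRandom:
    """Seeded random number generator that records how many values it has produced."""

    def __init__(self, seed, draws=0):
        self.seed = seed
        self.draws = 0
        self._rng = random.Random(seed)
        for _ in range(draws):  # fast-forward to a previously recorded state
            self.random()

    def random(self):
        self.draws += 1
        return self._rng.random()

    def record(self):
        """Provenance-style record: enough information to rebuild this state."""
        return {"seed": self.seed, "draws": self.draws}

    @classmethod
    def from_record(cls, record):
        return cls(record["seed"], record["draws"])


rng = TrackedRandom(seed=1)
for _ in range(3):  # e.g., draws consumed by earlier training runs
    rng.random()
saved = rng.record()  # {'seed': 1, 'draws': 3}
replayed = TrackedRandom.from_record(saved)
print(replayed.random() == rng.random())  # True: both produce the 4th value
```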

Other considerations. The comparisons described in this work were done using Jupyter notebooks. It is well known that Jupyter includes a Python kernel by default. However, Jupyter does not natively support Java. Fortunately, a Java kernel can be added to Jupyter using a project called IJava. The functionality provided by this kernel enabled the comparisons made in this study. Clearly, these kernels are not directly related to the libraries under study but are noted since they provided the environment in which these libraries were exercised.

The usual comment that Python is more concise than Java wasn’t really applicable in these experiments. The var keyword, which was introduced in Java 10, provides local variable type inference and reduces some boilerplate code often associated with Java. Developing functions in the notebooks still requires defining the types of the parameters since Java is statically typed. In some cases, getting the generics right requires referencing the Tribuo API docs.

Earlier, it was mentioned that the data preprocessing steps were completed entirely in Python. It is significantly easier to perform data preprocessing or data cleaning activities in Python than in Java, for several reasons. The primary reason is the availability of supporting libraries that offer rich data preprocessing features, such as pandas. Because the quality of the dataset used to build an ML model is so important, the ease with which data preprocessing can be performed is an important consideration.

The ML tasks of classification, regression, and clustering were the focus of the comparisons made in this work. It should be noted again that scikit-learn provides many more algorithm implementations than Tribuo for each of these tasks. Furthermore, scikit-learn offers a broader range of features, such as an API for visualizations and dimensionality reduction techniques.

Source: oracle.com
